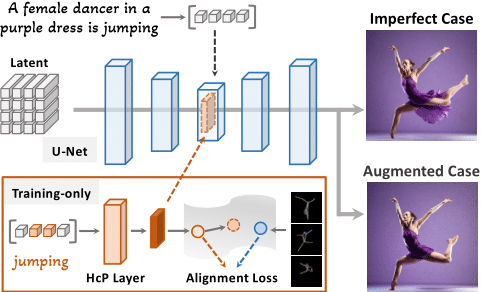

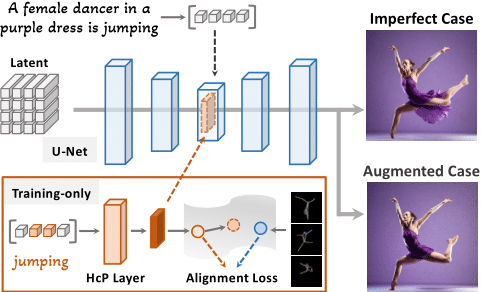

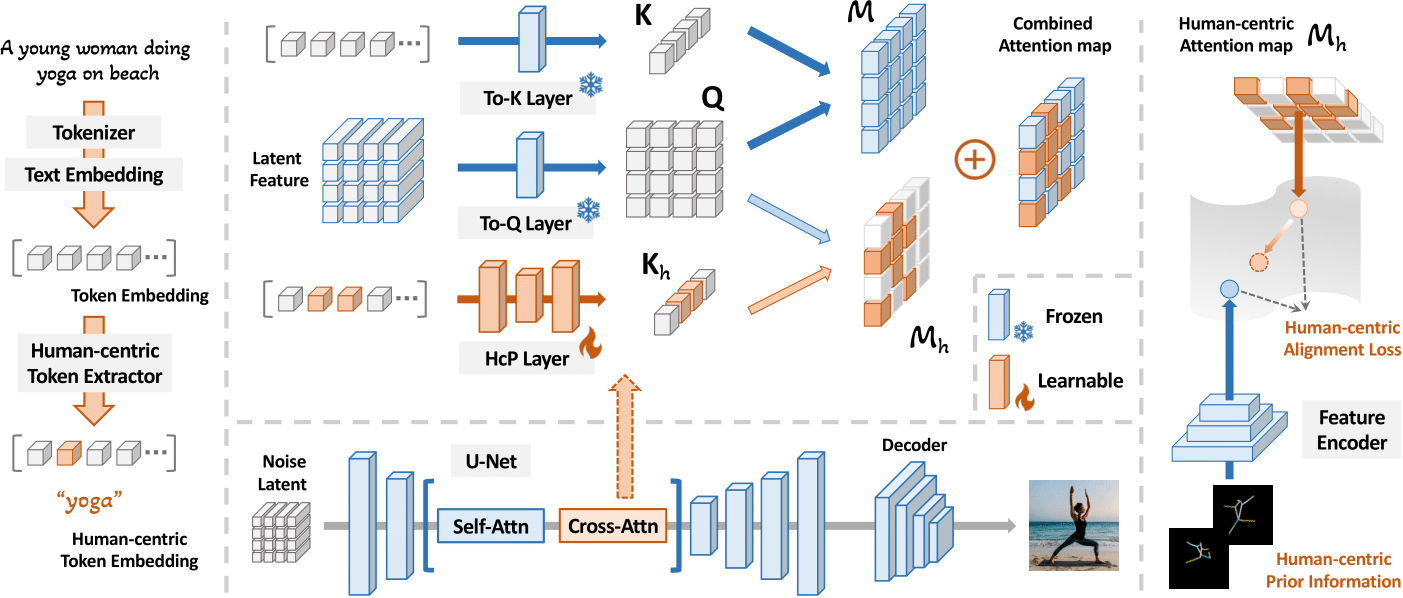

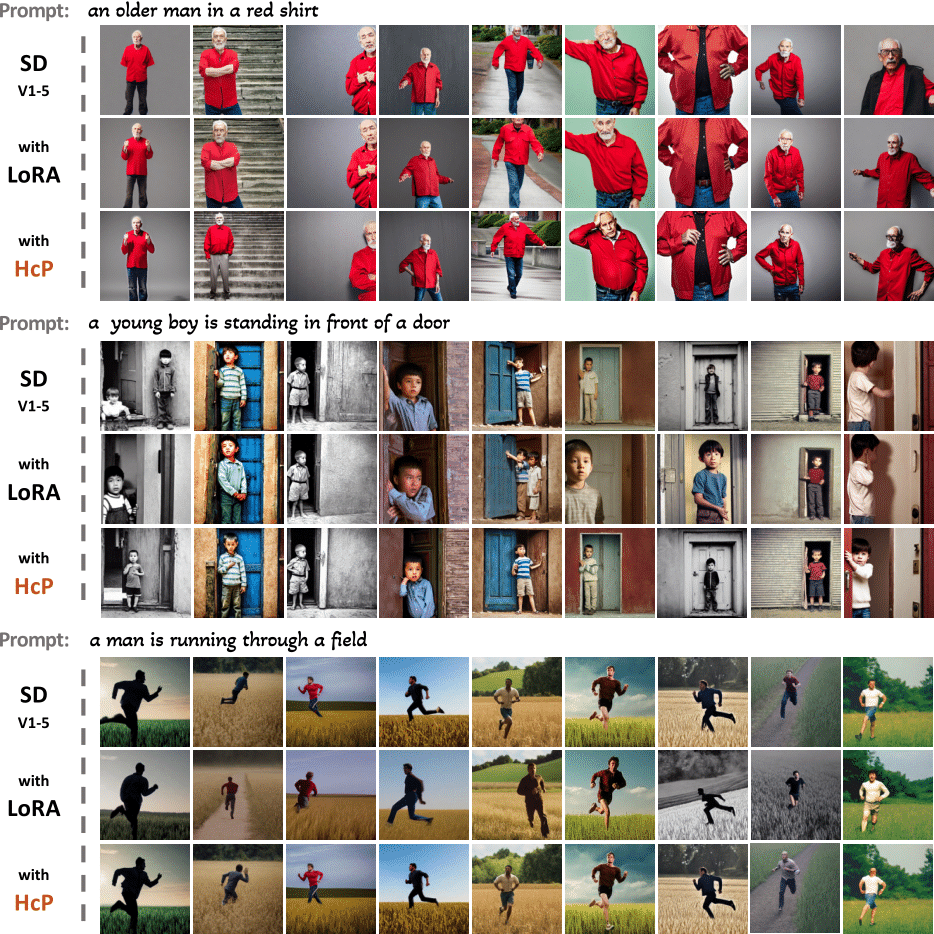

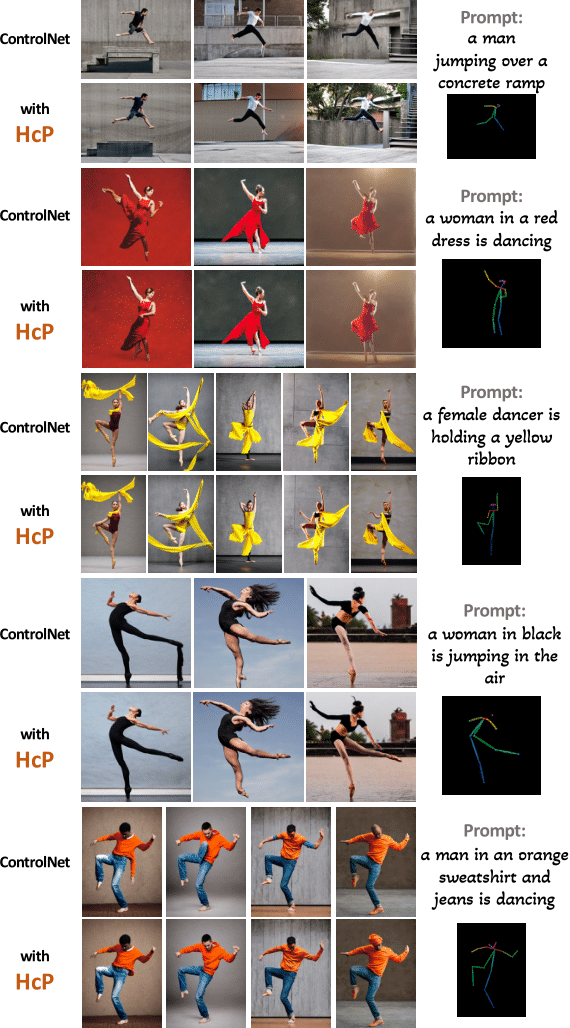

Vanilla text-to-image diffusion models struggle with generating accurate human images, commonly resulting in imperfect anatomies such as unnatural postures or disproportionate limbs. Existing methods address this issue mostly by fine-tuning the model with extra images or adding additional controls --- human-centric priors such as pose or depth maps --- during the image generation phase. This paper explores the integration of these human-centric priors directly into the model fine-tuning stage, essentially eliminating the need for extra conditions at the inference stage. We realize this idea by proposing a human-centric alignment loss to strengthen human-related information from the textual prompts within the cross-attention maps. To ensure semantic detail richness and human structural accuracy during fine-tuning, we introduce scale-aware and step-wise constraints within the diffusion process, according to an in-depth analysis of the cross-attention layer. Extensive experiments show that our method largely improves over state-of-the-art text-to-image models to synthesize high-quality human images based on user-written prompts.

@inproceedings{chen2023beyond,

title={Towards Effective Usage of Human-Centric Priors in Diffusion Models for Text-based Human Image Generation},

author={Junyan Wang and Zhenhong Sun and Zhiyu Tan and Xuanbai Chen and Weihua Chen and Hao Li and Cheng Zhang and Yang Song},

booktitle={The IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2024},

}Name: Junyan Wang Email: wjyau666@gmail.com